Research on SAND, an automated Very Long Baseline Interferometry (VLBI) imaging and analysis pipeline, and its related algorithms has recently been published in Monthly Notices of the Royal Astronomical Society (MNRAS, 473, 4505, 2018). The programme was led by Dr. Ming Zhang, a member of the galaxies and cosmology group at Xinjiang Astronomical Observatory, Chinese Academy of Sciences, in cooperation on data testing with Laboratoire d'Astrophysique de Universite Bordeaux-I.

Conventional VLBI data reduction relies heavily on human intervention, and subjective parameter choices lead to divergent results, which makes cross-analysis of heterogeneous data sets impossible. An automatic, objective processing method is therefore needed for reducing massive radio interferometry data sets. The SAND pipeline uses the ObitTalk interface developed by the National Radio Astronomy Observatory (NRAO), together with the scientific computing capabilities of the Python language, to formulate and automate the determination of processing parameters as far as possible. In effect, it converts the procedures of the VLBI data processing package AIPS into Python modules, which facilitates batch processing in data reduction and allows radio astronomers to develop the modules further. Source extraction is carried out in the image plane, while deconvolution and model fitting are performed in both the image plane and the visibility plane so that the results can be compared side by side. The pipeline output contains multi-dimensional physical information, such as catalogues of CLEANed images and reconstructed models, polarization maps, proper motion estimates, core light curves, and multiband, multi-epoch correlations and spectra.
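To give a flavour of what deriving a processing parameter from the data rather than from the analyst can mean, the sketch below computes an imaging cell size and map size from the longest baseline and the observing frequency. It is not taken from SAND: the function name, the `pixels_per_beam` and `field_mas` defaults, and the power-of-two rounding are all assumptions made for illustration. It relies only on the standard approximation that the synthesised beam width is roughly the wavelength divided by the longest baseline.

```python
import math

C = 299_792_458.0                                 # speed of light in m/s
RAD_TO_MAS = 180.0 / math.pi * 3600.0 * 1000.0    # radians -> milliarcseconds


def imaging_parameters(max_baseline_m, freq_hz, pixels_per_beam=4, field_mas=50.0):
    """Hypothetical helper: derive imaging parameters from data properties only.

    pixels_per_beam and field_mas are illustrative defaults, not SAND settings.
    """
    wavelength = C / freq_hz
    # Approximate synthesised beam width: lambda / B_max.
    beam_mas = wavelength / max_baseline_m * RAD_TO_MAS
    # Sample the beam with a fixed number of pixels.
    cell_mas = beam_mas / pixels_per_beam
    # Smallest power-of-two map size that covers the requested field.
    n_pixels = math.ceil(field_mas / cell_mas)
    image_size = 1 << (n_pixels - 1).bit_length()
    return beam_mas, cell_mas, image_size


# Example: an 8000 km baseline observed at 8.4 GHz.
beam, cell, size = imaging_parameters(8.0e6, 8.4e9)
print(f"beam ~ {beam:.2f} mas, cell = {cell:.3f} mas, image size = {size} pixels")
```

Because every value follows from the data and a small set of fixed rules, two runs over the same data set yield the same parameters, which is the property an automated pipeline needs for cross-analysis.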

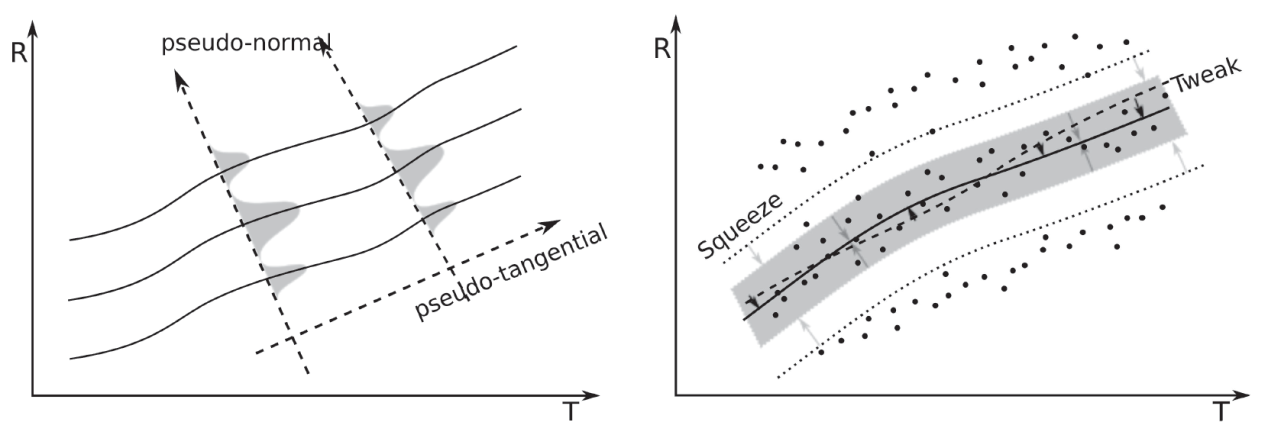

As an innovation of the SAND pipeline, the paper proposes STRIP, a regression algorithm analogous to the CLEAN deconvolution algorithm in radio interferometric imaging, which automatically detects the linear or non-linear proper motion trajectories of radio jet components. Unlike pure linear discriminant analysis, the algorithm searches for the most significant pseudo-normal distribution of the sectioned samples along the local pseudo-tangential direction through an iterative 'squeeze-'n'-tweak' process. Once the linear or non-linear pattern of a component trajectory has converged, the algorithm recognises, detects and strips that individual proper motion pattern. This provides an objective way to match jet components across epochs and determine their proper motions, and avoids the spurious superluminal motions that result from mismatched components in manual data reduction. The paper also determines the confusion limit of the algorithm through simulations that vary both the degree of separation and the curvature of the trajectories.
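The CLEAN-like idea of finding the strongest pattern, removing it and repeating can be illustrated with a deliberately simplified sketch. This is not the published STRIP algorithm. It handles straight-line trajectories only, seeds each search with an exhaustive pair test, and replaces the pseudo-normal significance criterion with a plain residual band that is narrowed ("squeeze") while the line is refitted ("tweak"). All function names, the tolerance and the toy data are assumptions.

```python
import numpy as np


def best_seed(t, r, tol):
    """Try every pair of points; keep the line with the most points within tol."""
    best = (0, 0.0, 0.0)  # (count, slope, intercept)
    for i in range(len(t)):
        for j in range(i + 1, len(t)):
            if t[i] == t[j]:
                continue  # same epoch: no finite slope
            slope = (r[j] - r[i]) / (t[j] - t[i])
            intercept = r[i] - slope * t[i]
            count = int(np.sum(np.abs(r - (slope * t + intercept)) <= tol))
            if count > best[0]:
                best = (count, slope, intercept)
    return best


def squeeze_and_tweak(t, r, slope, intercept, width, min_width, shrink=0.7):
    """Refit the line (tweak) while narrowing the acceptance band (squeeze)."""
    while True:
        inside = np.abs(r - (slope * t + intercept)) <= width
        if inside.sum() < 3 or width <= min_width:
            return slope, intercept, inside
        slope, intercept = np.polyfit(t[inside], r[inside], 1)
        width = max(width * shrink, min_width)


def strip_trajectories(t, r, tol=0.05, min_points=4):
    """Repeatedly find the best-supported line and strip its points."""
    t, r = np.asarray(t, dtype=float), np.asarray(r, dtype=float)
    remaining = np.ones(len(t), dtype=bool)
    found = []
    while remaining.sum() >= min_points:
        tt, rr = t[remaining], r[remaining]
        count, slope, intercept = best_seed(tt, rr, tol)
        if count < min_points:
            break
        slope, intercept, inside = squeeze_and_tweak(
            tt, rr, slope, intercept, width=3 * tol, min_width=tol)
        if inside.sum() < min_points:
            break
        found.append((float(slope), float(intercept), int(inside.sum())))
        # Strip the matched points from the pool, as CLEAN subtracts a component.
        remaining[np.flatnonzero(remaining)[inside]] = False
    return found, remaining


# Toy data: two components over eight epochs (years) plus one spurious point.
epochs = np.arange(8.0)
wiggle = 0.01 * (-1.0) ** np.arange(8)  # small deterministic position scatter (mas)
t = np.concatenate([epochs, epochs, [3.0]])
r = np.concatenate([1.0 + 0.5 * epochs + wiggle,
                    6.0 + 0.1 * epochs - wiggle,
                    [9.0]])

found, leftover = strip_trajectories(t, r)
for slope, intercept, n in found:
    print(f"proper motion {slope:.3f} mas/yr from {n} points")
print("unmatched points:", int(leftover.sum()))
```

In this toy case each stripped line corresponds to one jet component, and the spurious point is left unmatched rather than being forced onto a trajectory. That is the behaviour that guards against proper motions inferred from mismatched components.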

Article Link: http://adsabs.harvard.edu/abs/2018MNRAS.473.4505Z

Squeeze-'n'-tweak effect (sqeeze-n-tweak.png)